In this post I'll demonstrate one way to use Bayesian methods to build a 'dynamic' or 'learning' regression model. When I say 'learning,' I mean that it can elegantly adapt to new observations without requiring a refit.

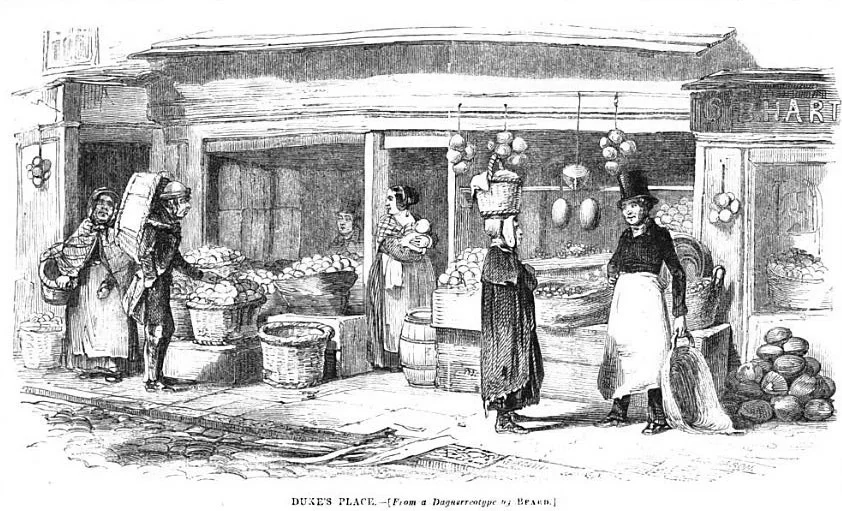

Today we lay our scene in London between the years 1760-1850. The good people of London have bread and they have wheat, which is, of course, what bread is made from. One would expect that the price of bread and the price of wheat are closely correlated and generally they are, though in periods of turmoil things can come unstuck. And Europe, between 1760-1850, was a very eventful place.

Our dataset is sourced from the collection, Consumer price indices, nominal / real wages and welfare ratios of building craftsmen and labourers, 1260-1913, kindly compiled by Robert C. Allen at the University of Oxford's Department of Economics, and it can be downloaded from the International Institute of Social History.

Let's start by having a look at the prices of wheat and bread over this period:

# prepare ipython

%pylab inline

import pandas as pd

from scipy import stats

import pymc as pm

import random

# import data

fileloc = '/data/'

filename = 'London_bread_series.csv'

bread = pd.read_csv(fileloc+filename)

# limit data to period of interest

mask = (bread['year']>=1760) & (bread['year']<=1850)

bread = bread[mask]

# Break into chunks of decades, excepting the first thirty years

bands = [1760,1790,1800,1810,1820,1830,1840,1850]

bread['decade'] = pd.cut(bread['year'], bins = bands)

pd.value_counts(bread['decade'])

listDecade=sort(list(pd.value_counts(bread['decade']).index))

#### Charts

# create colourmap for decades

mycmap = cm.coolwarm

n=len(listDecade)

colnums = [int(i) for i in np.linspace(0,256,n)]

decadeColours = mycmap(colnums)

fig = plt.figure(figsize(16,6), dpi=1600) # specifies the parameters of our graphs

plt.subplots_adjust(hspace=.5) # extra space between plots

gridsize = (2,2)

## Scatterplot of wheat vs. bread prices

ax0 = plt.subplot2grid(gridsize,(0,0),rowspan=2)

sc = ax0.scatter(bread['wheat_ag'], bread['bread_ag'], c=bread['year'], cmap=mycmap, s=35, alpha=.8)

ax0.set_ylim(0,4.5)

ax0.set_xlim(0,3)

plt.colorbar(sc, ax=ax0)

ax0.set_title("Wheat & Bread Prices in London")

ax0.set_xlabel("Wheat Price: Grams of Silver per Litre")

ax0.set_ylabel("Bread Price: Grams of Silver per Kg")

ax0.xaxis.set_ticks_position('none')

ax0.yaxis.set_ticks_position('none')

# add regression line fit to pre-war period

xrangex = np.linspace(0.5,2.5,100)

bread_temp = bread[bread['decade']=='(1760, 1790]']

gradient, intercept, r_value, p_value, std_err = \

stats.linregress(bread_temp['wheat_ag'],bread_temp['bread_ag'])

yhat_1 = intercept + gradient * xrangex

ax0.plot(xrangex,yhat_1, c='blue', label='Regression for 1760-1790')

# add regression line fit to post-war period

mask = (bread['year']>=1820)

bread_temp = bread[mask]

gradient, intercept, r_value, p_value, std_err = \

stats.linregress(bread_temp['wheat_ag'],bread_temp['bread_ag'])

yhat_1 = intercept + gradient * xrangex

ax0.plot(xrangex,yhat_1, c='red', label='Regression for 1820-1850')

ax0.legend(loc=4);

## Time series charts

ax1 = plt.subplot2grid(gridsize,(0,1))#,rowspan=2)

ax1.plot(bread['year'],bread['wheat_ag'],c='red')

ax1.set_title("Wheat Prices in London\n Grams of Silver per Litre")

ax1.xaxis.set_ticks_position('none')

ax1.yaxis.set_ticks_position('none')

ax2 = plt.subplot2grid(gridsize,(1,1))#,rowspan=2)

ax2.plot(bread['year'],bread['bread_ag'],c='blue')

ax2.set_title("Bread Prices in London\n Grams of Silver per Kg")

ax2.xaxis.set_ticks_position('none')

ax2.yaxis.set_ticks_position('none')

(Notice that I'm quoting prices in grams of silver, rather than in the local sterling. This is a lazy attempt to remove (somewhat) the effect of currency devaluation and fluctuation).

Now: from 1760, through the American Revolutionary War, up until the mid-1790s, the prices of wheat and bread in London are fairly steady. And so is the relationship between those prices: see the compact cluster of blue points on the scatterpot. In a typical year, the price of a kilogram of bread is roughly 50g of silver on top of the price of litre of wheat. Then the chaos: the French Revolutionary Wars and their offspring, the Napoleonic Wars; a series of very poor wheat harvests in the 1790s; Napoleon's attempt to lock Britain out of European trade with his Continental System. In London, wheat and bread prices go haywire between 1795-1820. After Waterloo, and Britain's subsequent assumption of global maritime dominance, prices steady again. But the relationship between them has changed. The red points and the red best fit line show that from ~1820, London bread now tends to cost a higher multiple of the cost of wheat.

What are the causes for these fluctuations in prices? There'd be many influences on the supply & demand of both wheat and bread, but that's a question for other blogs. Our goal here is to develop a regression model that predicts the price of bread and, as the years roll by, will automatically adapt to the changing economic relationship with the price of wheat. Basically, we want a model which inititially follows the blue line, but will move to the red line as post-war economic conditions settle in.

Let's bake!

Bayesian modelling using Markov chain Monte Carlo

Before we start: in this post, I just want to focus on demonstrating simply the learning capability of Bayesian models. We're not going to talk about how Markov chain Monte Carlo methods and Bayesian modelling work. The subject is too big and technical for a single blog post. For the reader who wants to understand more, I recommend these excellent resources:

- Doing Bayesian Data Analysis: textbook by John K. Kruschke is lucid and comprehensive, covering everything from Bayes rule through MCMC sampling up to GLMs. And puppies :-) The only downside (for me) was that the programming examples are given in BUGS rather than Python, which is why you would want to couple it with:

- Probabilistic Programming & Bayesian Methods for Hackers: a free, practical guide with lots of worked examples in PyMC. Comes in iPython notebook format so you can easily tinker with it.

- Think Bayes: Those who are new to Bayes may find this book of worked examples helpful, I did. Problems are solved in Python through direct use of Bayes' rule (no MCMC).

Specifying the model

The price of bread ($y$) is normally distributed with mean $\mu$ and variance $\sigma^2$:

$y \sim \mathcal{N}(\mu, \sigma^2) $

Where $\mu$ is a linear function of the price of wheat ($x$):

$\mu = \alpha + \beta \ast x_i $

Each of our parameters $\alpha$, $\beta$ and $\sigma$ are themselves random variables (in probability jargon). We must specify distributional priors for them. Rather than have a static prior for our coefficients, we're going to make the prior depend on the previous decade's posterior:

$\alpha_t \sim \mathcal{N}(\overline{\alpha}_{t-1}, 0.5^2) $

$\beta_t \sim \mathcal{N}(\overline{\beta}_{t-1}, 0.5^2) $

$\sigma \sim \gamma(0.1, 0.1) $

To reiterate: we're going to iterate through our data decade by decade. With each decade, we udpate our posterior estimates for $\alpha$ and $\beta$ and then we take the means from those posteriors and feed them into the priors for the next decade's update. (The standard deviation for $\alpha$ and $\beta$ remains fixed at 0.5. We'll talk about the standard deviation below.)

It's easier to understand when you see the output, so skip on down the the scatterplot with overlaid regression lines.

meanAlpha = 0 # prior mean for Alpha

meanBeta = 0 # prior mean for Beta

stdAlpha = .5 # Standard deviation for Alpha

stdBeta = .5 # Standard deviation for Beta

tracesAlpha = [] # to save MCMC traces for Alpha

tracesBeta = [] # to save MCMC traces for Beta

for decade in listDecade:

bread_temp = bread[bread['decade']==decade]

price_wheat=array(bread_temp['wheat_ag'][isnan(bread_temp['wheat_ag'])==False])

price_bread=array(bread_temp['bread_ag'][isnan(bread_temp['bread_ag'])==False])

# Set prior for beta & alpha

# Fairly constrained - gradient changes little

alpha = pm.Normal('alpha', meanAlpha, 1./(stdAlpha**2), value=meanAlpha)

beta = pm.Normal('beta', meanBeta, 1./(stdBeta**2), value=meanBeta)

# define tau, parameter for precision of bread price distribution

tau = pm.Gamma('tau', alpha=0.1, beta=0.1)

# specify likelihood formula

@pm.deterministic

def mu(price_wheat=price_wheat, beta=beta, alpha=alpha):

return alpha + beta * price_wheat

# Specify probabilistic form of output variable

observed = pm.Normal('observed', mu, tau, value=price_bread, observed=True)

# Tie them together in a PyMC model statement

model = pm.Model([observed, beta, alpha, tau])

## Use MCMC / Metropolis to sample from the posterior distribution

map_ = pm.MAP(model)

map_.fit()

mcmc = pm.MCMC(model)

mcmc.sample(60000, 10000, 2) # 10k burn in, thin every 2nd

# extract samples for random variables

alpha_samples = [i for [i] in mcmc.trace('alpha')[:, None]] # extract posterior samples of beta

beta_samples = [i for [i] in mcmc.trace('beta')[:, None]] # extract posterior samples of beta

# save trace

tracesAlpha.append(alpha_samples)

tracesBeta.append(beta_samples)

# Update prior assumptions about alpha and beta

meanAlpha = mean(alpha_samples)

meanBeta = mean(beta_samples)

##### CHARTS

fig = plt.figure(figsize(16,6), dpi=1600) # specifies the parameters of our graphs

plt.subplots_adjust(hspace=.5) # extra space between plots

gridsize = (7,4)

# Scatterplot points

ax1 = plt.subplot2grid(gridsize,(0,0), rowspan=7, colspan=2)

ax1.scatter(bread['wheat_ag'], bread['bread_ag'], c=bread['year'], cmap=mycmap, s=35, alpha=.7)

ax1.set_ylim(0,4.5)

ax1.set_xlim(0,3)

ax1.set_title("Wheat & Bread Prices in London")

ax1.set_xlabel("Wheat Price: Grams of Silver per Litre")

ax1.set_ylabel("Bread Price: Grams of Silver per Kg")

ax1.xaxis.set_ticks_position('none')

ax1.yaxis.set_ticks_position('none')

# Plot regression lines with a posteriori alpha & beta

xrangex2 = array([[i] for i in xrangex])

for (traceAlpha,traceBeta,colour,decade) in zip(tracesAlpha,tracesBeta,decadeColours, listDecade):

y = mean(traceAlpha + xrangex2 * traceBeta, axis=1)

ax1.plot(xrangex, y, '-', color=colour, alpha=1, lw=1.5, label=decade)

ax1.legend(loc=4);

# histograms of posteriors for both alpha & beta

for i,j,k in zip(tracesAlpha,decadeColours,range(7)):

ax1 = plt.subplot2grid(gridsize,(k,2))

ax1.hist(i, histtype='stepfilled', bins=30, alpha=0.3, color=j, normed=True)

ax1.set_xlim(-1,3)

ax1.set_ylim(0,2.5)

ax1.xaxis.set_ticks_position('none')

ax1.yaxis.set_ticks_position('none')

ax1.yaxis.set_visible(False)

if k==0:

ax1.set_title(r'$\alpha$ posteriors by decade')

for i,j,k in zip(tracesBeta,decadeColours,range(7)):

ax1 = plt.subplot2grid(gridsize,(k,3))

ax1.hist(i, histtype='stepfilled', bins=30, alpha=0.3, color=j, normed=True)

ax1.set_xlim(-1,3)

ax1.set_ylim(0,2.5)

ax1.xaxis.set_ticks_position('none')

ax1.yaxis.set_ticks_position('none')

ax1.yaxis.set_visible(False)

if k==0:

ax1.set_title(r'$\beta$ posteriors by decade')

The coloured lines show regression defined by the posterior $\alpha$ and $\beta$ at each decade. At 1790 we start where the dark blue line is. Over the decades the line gradually moves upward and the gradient becomes more positive, eventually settling where the dark red line is in 1850. The gradient is determined by the posterior distribution of $\beta$: you can see the distribution edging rightward in the histograms.

Did you notice that the final red line for 1840-1850 has a different slope to the simple OLS regression line I fit at the start of this post? This brings us to an interesting capability of Bayesian modelling: our ability to constrain the way that the model adapts via the limits we place on the prior.

If the prior distribution is very narrow, then it constrains the dimensions of the posterior: basically, it limits how far the regression line can move in a decade. In the above example, I've kept the prior distributions fairly narrow at each step, and that's why you see only very small changes in the gradient of the line. This constraint can be applied via the precision/variance of the prior distribution.

Let's try the exercise again, but let's make the variance of the priors for $\alpha$ and $\beta$ larger, and thereby give them more room to move in each step. Here' we're running the exact same code, except with the stdAlpha & stdBeta variables, which set the standard derviation of those distributions, enlarged.

meanAlpha = 0 # prior mean for Alpha

meanBeta = 0 # prior mean for Beta

stdAlpha = 5 # Standard deviation for Alpha

stdBeta = 5 # Standard deviation for Beta

tracesAlpha = [] # to save MCMC traces for Alpha

tracesBeta = [] # to save MCMC traces for Beta

for decade in listDecade:

bread_temp = bread[bread['decade']==decade]

price_wheat=array(bread_temp['wheat_ag'][isnan(bread_temp['wheat_ag'])==False])

price_bread=array(bread_temp['bread_ag'][isnan(bread_temp['bread_ag'])==False])

# Set prior for beta & alpha

# Fairly constrained - gradient changes little

alpha = pm.Normal('alpha', meanAlpha, 1./(stdAlpha**2), value=meanAlpha)

beta = pm.Normal('beta', meanBeta, 1./(stdBeta**2), value=meanBeta)

# define tau, parameter for precision of bread price distribution

tau = pm.Gamma('tau', alpha=0.1, beta=0.1)

# specify likelihood formula

@pm.deterministic

def mu(price_wheat=price_wheat, beta=beta, alpha=alpha):

return alpha + beta * price_wheat

# Specify probabilistic form of output variable

observed = pm.Normal('observed', mu, tau, value=price_bread, observed=True)

# Tie them together in a PyMC model statement

model = pm.Model([observed, beta, alpha, tau])

## Use MCMC / Metropolis to sample from the posterior distribution

map_ = pm.MAP(model)

map_.fit()

mcmc = pm.MCMC(model)

mcmc.sample(60000, 10000, 2) # 10k burn in, thin every 2nd

# extract samples for random variables

alpha_samples = [i for [i] in mcmc.trace('alpha')[:, None]] # extract posterior samples of beta

beta_samples = [i for [i] in mcmc.trace('beta')[:, None]] # extract posterior samples of beta

# save trace

tracesAlpha.append(alpha_samples)

tracesBeta.append(beta_samples)

# Update prior assumptions about alpha and beta

meanAlpha = mean(alpha_samples)

meanBeta = mean(beta_samples)

##### CHARTS

fig = plt.figure(figsize(16,6), dpi=1600) # specifies the parameters of our graphs

plt.subplots_adjust(hspace=.5) # extra space between plots

gridsize = (7,4)

# Scatterplot points

ax1 = plt.subplot2grid(gridsize,(0,0), rowspan=7, colspan=2)

ax1.scatter(bread['wheat_ag'], bread['bread_ag'], c=bread['year'], cmap=mycmap, s=35, alpha=.7)

ax1.set_ylim(0,4.5)

ax1.set_xlim(0,3)

ax1.set_title("Wheat & Bread Prices in London")

ax1.set_xlabel("Wheat Price: Grams of Silver per Litre")

ax1.set_ylabel("Bread Price: Grams of Silver per Kg")

ax1.xaxis.set_ticks_position('none')

ax1.yaxis.set_ticks_position('none')

# Plot regression lines with a posteriori alpha & beta

xrangex2 = array([[i] for i in xrangex])

for (traceAlpha,traceBeta,colour,decade) in zip(tracesAlpha,tracesBeta,decadeColours, listDecade):

y = mean(traceAlpha + xrangex2 * traceBeta, axis=1)

ax1.plot(xrangex, y, '-', color=colour, alpha=1, lw=1.5, label=decade)

ax1.legend(loc=4);

# histograms of posteriors for both alpha & beta

for i,j,k in zip(tracesAlpha,decadeColours,range(7)):

ax1 = plt.subplot2grid(gridsize,(k,2))

ax1.hist(i, histtype='stepfilled', bins=30, alpha=0.3, color=j, normed=True)

ax1.set_xlim(-1,3)

ax1.set_ylim(0,2.5)

ax1.xaxis.set_ticks_position('none')

ax1.yaxis.set_ticks_position('none')

ax1.yaxis.set_visible(False)

if k==0:

ax1.set_title(r'$\alpha$ posteriors by decade')

for i,j,k in zip(tracesBeta,decadeColours,range(7)):

ax1 = plt.subplot2grid(gridsize,(k,3))

ax1.hist(i, histtype='stepfilled', bins=30, alpha=0.3, color=j, normed=True)

ax1.set_xlim(-1,3)

ax1.set_ylim(0,2.5)

ax1.xaxis.set_ticks_position('none')

ax1.yaxis.set_ticks_position('none')

ax1.yaxis.set_visible(False)

if k==0:

ax1.set_title(r'$\beta$ posteriors by decade')

Now you can see that the gradient of subsequent regression lines varies. The histograms of posteriors are also wider, and their means move further in each decade.

Comments on subjectivity & usefulness in Bayesian modelling

Some readers may find it distasteful that the results of this "mathematical modelling" vary with subjective or arbitrary choices like the prior standard deviation. We might like to think that it should be somehow "objective." But the prior exerts a powerful influence over Bayesian modelling. A prior must be specified, and we often have no choice but to specify it with guesswork and/or parameters that are more pragmatic than "correct." It's worth noting that choices made by the Frequentist modeller are no less subjective or influential on their results. Although they need not specify a prior, they still must make choices like, for example, specifying a model formula or governing distributions. At any rate, that's missing the point. We should daily remind ourselves of George E. P. Box's dictum that, "Essentially, all models are wrong, but some are useful." I think the task of the applied statistician / machine learning practitioner is to be as useful as possible, not as correct as possible. Being able to control the "rate of learning" is a very useful thing, even if our justification for our choice of parameters may be extraneous to the data, such as contextual knowledge or even, horror of horrors, intuition.

A model that adapts gradually can be thought of one that is less influenced by outliers. By controlling the rate of learning, we implement models that adapt as rapidly or as slowly as we judge appropriate to circumstances. In the case of London's bread prices, I would suggest that the chaotic prices we see between 1800-1820 do not reflect long term (or short term) trends and we don't want our predictions to be tossed about by the storm. We want it to adapt gracefully and gradually, because that is how we understand/imagine that macroeconomic forces work: slowly. (Economists, feel free to weigh in on this...) We believe that the war years - where prices go dramatically off the recent trend - are outliers, and we don't want them to exert a strong influence ove our model. We expect things will settle down again into a steady state, likely not too far from where we started. And so we find that constraining that by, in this case, the precision parameter of our priors, is very useful.